Split Testing Broadcasts Using Variants

Every broadcast can have any number of variants, so you can test different aspects of a broadcast and compare their success. When a variant is first added to a broadcast, it is an exact copy of the original broadcast. You can then change it to test items such as:

- Subject

- Format

- Content

- Layout

- Attachments

#V2 Exclusive features – A/B Statistical Significance testing and improved reporting. See below.

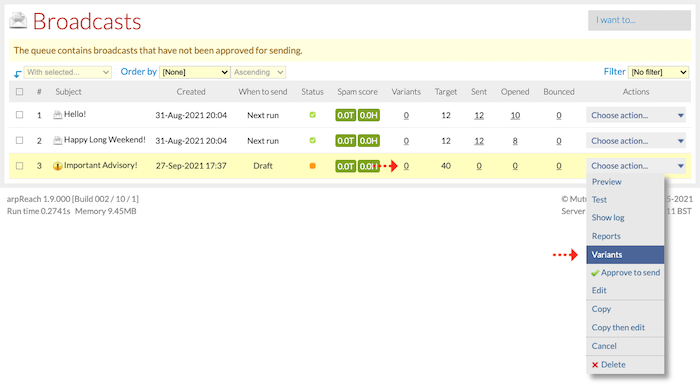

To manage the variants of a broadcast, choose the Variants option from the Choose action… drop-down menu or click the number under the Variants column.

“Use variants to test various aspects of broadcasts against each other”

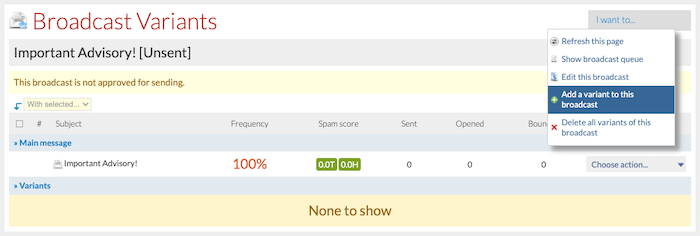

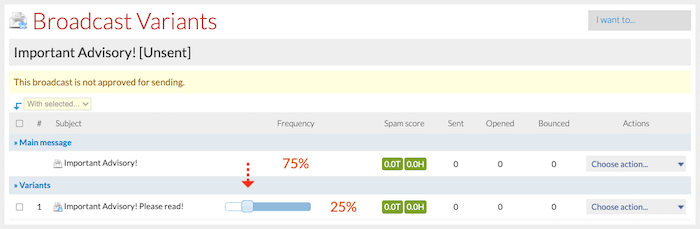

Once you have done so, this screen will appear:

To create your first split-test variant, select Add a variant to this message from the I want to… drop-down menu. This creates a copy of the original message as your first variant and opens the message editor for the variant you have just created.

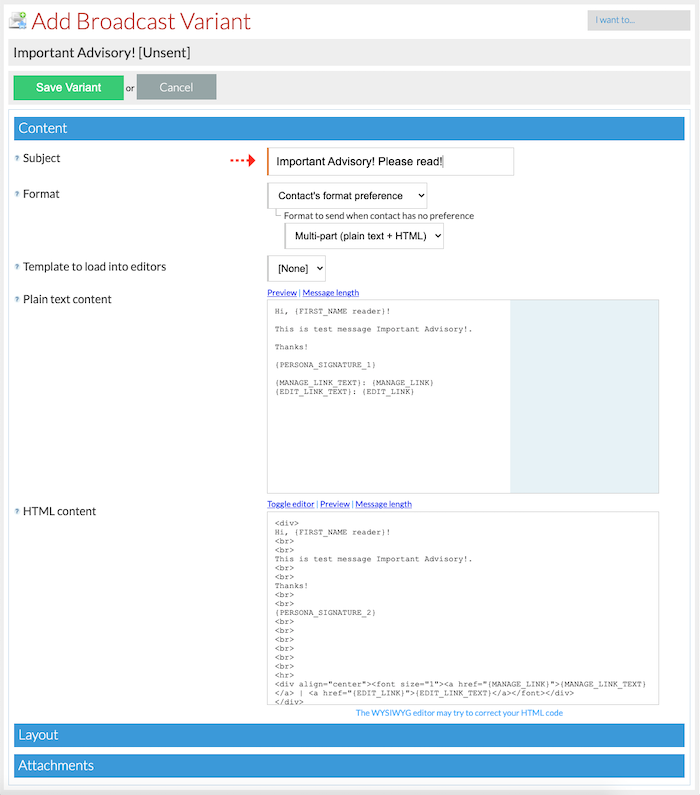

In the example image below, we changed the Subject line so we can split test the variant against the original.

You can change any aspect of the message. Once you have made your changes, click the Save Variant button.

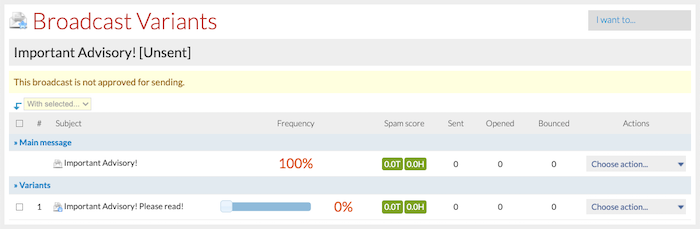

You will then be redirected back to the Broadcast Variants list screen, which now shows the variant you just created.

In the image below, take a look at the Frequency column.

You can change the percentage of contacts to be sent each message using the slider in the Frequency column, as pointed out below.

The system decides at random during the sending process which message will be sent to each contact.
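As a rough illustration of that random selection, here is a minimal Python sketch. It is not the platform's actual implementation: the function name `assign_variants`, the message names, and the assumption that the Frequency percentages add up to 100 are all hypothetical.

```python
import random

def assign_variants(contacts, frequencies, seed=None):
    """Pick one message per contact at random, weighted by its Frequency.

    `frequencies` maps a message name to its percentage share.
    Assumption: the percentages across all messages add up to 100.
    """
    if abs(sum(frequencies.values()) - 100) > 1e-9:
        raise ValueError("frequencies must add up to 100")
    rng = random.Random(seed)  # a seed is only useful for repeatable demos
    names = list(frequencies)
    weights = [frequencies[name] for name in names]
    # Each contact gets an independent weighted random draw.
    return {contact: rng.choices(names, weights=weights, k=1)[0]
            for contact in contacts}

if __name__ == "__main__":
    split = assign_variants(
        ["contact-1", "contact-2", "contact-3", "contact-4"],
        {"Original": 50, "Variant 1": 50},
    )
    for contact, message in split.items():
        print(contact, "->", message)
```

Because each contact is drawn independently, the actual split on a small list can differ noticeably from the percentages set on the slider; it only settles close to them as the number of contacts grows.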

#V2 Exclusive – Statistical Significance

What does ‘Statistically significant’ mean?

‘Statistically significant’ means that the result is unlikely to be explained solely by chance or random factors. In other words, you can rely on the result more than on one that is not statistically significant. There are factors and considerations that limit this, which are outlined below.

Set up your split tests in the same way as outlined above. The benefit of using #V2 will become apparent once your test has completed and you are looking at the results.

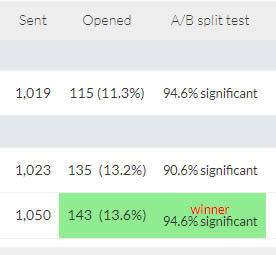

#V2 reporting also displays the words xx.x% Significant. Put another way, this is the level of confidence you can have in the winning result.

The winning test variant will be highlighted in green only when the statistical significance of the test result is over 90%.

In the image above, the level of confidence that the ‘winner’ will improve conversions, compared to the original message, is 94.6%.
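This article does not say how #V2 calculates the percentage. Purely as an illustration, one common way to get a confidence figure of this kind is a one-sided, pooled two-proportion z-test on the open (or conversion) counts. The sketch below assumes that method; the function names `confidence_pct` and `is_highlighted` are hypothetical, and only the 90% highlight threshold comes from the text above.

```python
import math

def confidence_pct(opens_a, sends_a, opens_b, sends_b):
    """Confidence (0-100) that message B's true rate is higher than A's.

    Assumption: a one-sided, pooled two-proportion z-test. The method #V2
    actually uses is not documented here and may differ.
    """
    rate_a = opens_a / sends_a
    rate_b = opens_b / sends_b
    pooled = (opens_a + opens_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if std_err == 0:
        return 50.0  # every send (or none) opened: no evidence either way
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF, written with the error function.
    return 100 * 0.5 * (1 + math.erf(z / math.sqrt(2)))

def is_highlighted(confidence, threshold=90.0):
    """Mirror the rule above: highlight only when significance is over 90%."""
    return confidence > threshold

if __name__ == "__main__":
    # Made-up counts, not taken from the screenshots.
    conf = confidence_pct(opens_a=40, sends_a=400, opens_b=60, sends_b=400)
    print(f"{conf:.1f}% Significant, highlighted: {is_highlighted(conf)}")
```

Applied to the figures quoted in this article, `is_highlighted(94.6)` is true while `is_highlighted(87.7)` is false, which matches when the winner is shown in green.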

A/B Testing

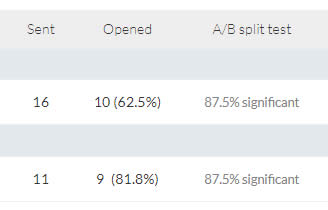

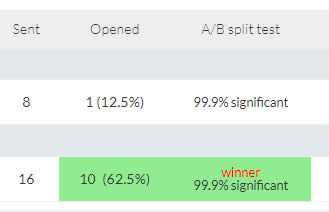

The two images above relate to simple A/B testing.

In the first image, the second option has the higher open rate (81.8%). While the open rate of option 2 is better than the original, the statistical significance of the result is less than 90% (87.7%). Compare that to the second image.

In the second image, option 2 again beat the control (option 1), with an open rate of 62.5% compared to 12.5% for option 1. In this case the statistical significance was greater than 90% and #V2 highlighted the winner in green.

Note of caution: Any statistician will point out that there needs to be enough data (sends) for a test to be reliable. Generally speaking, the more you send, the more reliable the result. The results of split tests sent to fewer than 250 contacts should be considered ‘indicators’.
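To see why more sends give a more reliable result, consider the approximate margin of error around an observed open rate. The sketch below uses the standard normal approximation at roughly 95% confidence; it is an illustration only, and the names `margin_of_error`, `is_indicator_only` and `MIN_CONTACTS` are hypothetical. Only the figure of 250 contacts comes from the note above.

```python
import math

MIN_CONTACTS = 250  # threshold quoted in the note of caution above

def margin_of_error(rate, sends, z=1.96):
    """Approximate margin of error for an observed rate.

    Uses the normal approximation; z=1.96 corresponds to roughly 95%.
    """
    return z * math.sqrt(rate * (1 - rate) / sends)

def is_indicator_only(sends):
    """True when a split test is too small to be more than an 'indicator'."""
    return sends < MIN_CONTACTS

if __name__ == "__main__":
    for sends in (50, 250, 1000):
        moe = margin_of_error(0.5, sends)
        label = "indicator only" if is_indicator_only(sends) else "more reliable"
        print(f"{sends} sends: open rate 50% +/- {moe * 100:.1f} points ({label})")
```

The margin shrinks with the square root of the number of sends, so quadrupling the list size only halves the uncertainty.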

Ultimately, a winning subject line is a winning subject line. Provided you send another email with that subject line to like-for-like contacts, you can reasonably expect it to be more effective. That expectation increases once you know that the result is statistically significant.